Selected Research Projects Showcase Significant Advances by Scholars Worldwide

CHICAGO--(BUSINESS WIRE)--May 29, 2018--SIGGRAPH 2018, the world’s leading annual interdisciplinary educational event showcasing the latest in computer graphics and interactive techniques, will present 128 cutting-edge Technical Papers from around the world, highlighting significant new scholarly work. The 45th SIGGRAPH conference will take place 12–16 August at the Vancouver Convention Centre. To register for the conference, visit s2018.SIGGRAPH.org.


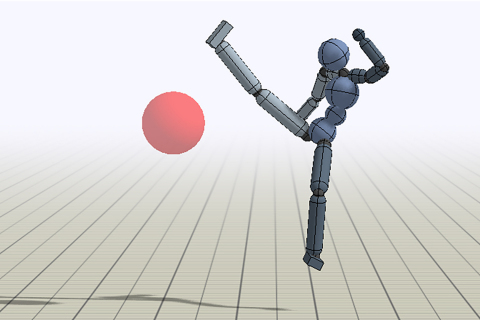

“DeepMimic: Example-Guided Deep Reinforcement Learning of Physics-Based Character Skills” © 2018 University of California, Berkeley and University of British Columbia (Graphic: Business Wire)

SIGGRAPH 2018 Technical Papers were chosen through a rigorous peer-review process by a prestigious international jury of scholars and scientists. Each chosen paper adheres to the highest scientific standards and will be published in a special issue of ACM Transactions on Graphics. In addition to papers selected by the conference jury, select papers that have been published in ACM Transactions on Graphics during the past year will also be showcased in Vancouver.

“Vancouver is about to be brimming with the who’s-who of graphics. Be they students, engineers, or professors, everybody with a passion for tech and graphics converges to the SIGGRAPH conference to learn about the most innovative and novel ideas in the field. The technical papers, in particular, are always trend-setting,” said Mathieu Desbrun, SIGGRAPH 2018 Technical Papers Chair and the Carl Braun Professor at the California Institute of Technology.

Highlights of this year’s Technical Papers program include:

Looking to Listen at the Cocktail Party: A Speaker-Independent Audio-Visual Model for Speech Separation [Israel, U.S.]

Authors: Ariel Ephrat, Google Inc., Hebrew University of Jerusalem; Inbar Mosseri, Oran Lang, Tali Dekel, Kevin Wilson, Avinatan Hassidim, and Michael Rubinstein, Google Inc.; and, William Freeman, Google Inc., Massachusetts Institute of Technology (MIT)

The authors present a trained machine-learning model that uses both the visual and the audio signals of an input video to separate the speech of the different speakers in that video.

Mode-Adaptive Neural Networks for Quadruped Motion Control [United Kingdom, U.S.]

Authors: He Zhang, Sebastian Starke, and Taku Komura, University of Edinburgh; and, Jun Saito, Adobe Research

This paper proposes a data-driven approach for animating quadruped motion. The novel architecture, called Mode-Adaptive Neural Networks, can learn a wide range of locomotion modes and non-cyclic actions.

Skaterbots: Optimization-based Design and Motion Synthesis for Robotic Creatures with Legs and Wheels [Switzerland, U.S., Canada]

Authors: Moritz Geilinger, Roi Poranne, and Stelian Coros, ETH Zurich, Department of Computer Science; Ruta Desai, Carnegie Mellon University; and, Bernhard Thomaszewski, Université de Montréal

The “Skaterbots” researchers propose a computation-driven approach to design optimization and motion synthesis for robotic creatures that locomote using arbitrary arrangements of legs and wheels.

DeepMimic: Example-Guided Deep Reinforcement Learning of Physics-Based Character Skills [U.S., Canada]

Authors: Xue Bin Peng, Pieter Abbeel, and Sergey Levine, University of California, Berkeley; and, Michiel van de Panne, University of British Columbia

This paper presents a deep reinforcement learning framework that enables simulated characters to imitate a rich repertoire of highly dynamic and acrobatic skills from reference motion clips.

Automatic Machine Knitting of 3D Meshes [U.S., Switzerland]

Authors: Vidya Narayanan, Lea Albaugh, Jessica Hodgins, and James McCann, Carnegie Mellon University; and, Stelian Coros, ETH Zurich, Carnegie Mellon University

The authors present the first computational approach that can transform 3D meshes created in traditional modeling programs into instructions for a computer-controlled knitting machine.

Instant 3D Photography [United Kingdom, U.S.]

Authors: Peter Hedman, University College London; and, Johannes Kopf, Facebook

In less than 60 seconds, the authors’ method turns color-and-depth images captured with a dual-lens camera phone into a highly detailed 3D panorama that can be viewed with head-motion parallax in VR.

Deep Video Portraits [Germany, France, United Kingdom, U.S.]

Authors: Hyeongwoo Kim, Ayush Tewari, Weipeng Xu, and Christian Theobalt, Max Planck Institute for Informatics; Pablo Garrido and Patrick Perez, Technicolor; Justus Thies and Matthias Niessner, Technical University of Munich; Christian Richardt, University of Bath; and, Michael Zollhöfer, Stanford University